AI-Powered Evolution in Backplane Cable Technology: How Industry Giants Like TE, Amphenol, and Molex Are Shaping the Future

Breaking the Copper Bottleneck: Hybrid Backplane Architectures Emerge

In the era of 224 Gb/s signaling, the physical limitations of copper interconnects have become painfully evident. Traditional materials and topologies, once sufficient, are now under siege by soaring demands for bandwidth and efficiency. Hybrid backplane architectures, which meld copper and optical technologies, are emerging as a compelling solution. Short-range, energy-efficient copper connections coexist with high-bandwidth, long-reach optical fibers. Modular designs mitigate operational complexity, streamlining maintenance and upgrades. TE Connectivity, Amphenol, and Molex, among other global leaders, are deploying advanced backplane cable strategies that empower AI-centric infrastructure to scale intelligently.

Legacy Foundations: The Evolution of Backplane Cable in High-Performance Systems

Backplane cables have long been the circulatory system of high-performance computing. As speeds and data densities rose, backplane systems evolved through several generations. But the ascent of next-gen AI accelerators and HPC clusters has pushed copper’s intrinsic performance toward its practical ceiling. Optical pathways are moving from optional to essential for the longest links. These shifts herald a decisive rethinking of interconnect design, ushering in optical hybridization and advanced shielding techniques.

The Role of Traditional Backplanes: From Stackable Boards to Modular Design

Conventional backplane and daughtercard systems were once the gold standard of scalability. As early electronic systems demanded greater PCB real estate, engineers resorted to stacking and hand-soldering multiple boards—a cumbersome and unreliable method. Backplanes emerged to streamline this, allowing hot-swappable daughtercards for compute, storage, and I/O. This modularity simplified upgrades and maintenance while accommodating performance scaling.

Standardized interfaces such as Eurocard and CompactPCI provided the blueprint for mechanical and electrical uniformity. The backplane’s physical and logical segmentation laid the groundwork for today's high-speed modular systems.

From Edge Connectors to Shielded Contact Pairs: How Interconnects Got Smarter

As signal frequencies surged, so did the complexity of interconnects. Simple edge card connectors gave way to precision-engineered, two-piece pin-and-socket interfaces. Controlled-impedance stripline and microstrip traces became imperative. High-layer-count PCBs (30+ layers) and exotic dielectric materials emerged to manage signal integrity parameters like insertion loss, return loss, and crosstalk.

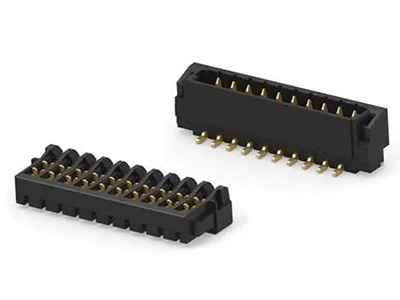

To support higher pin counts and power levels, standard 0.1-inch pitch connectors evolved into finely tuned contact systems. Differential signaling necessitated tightly coupled, shielded contact pairs. This meticulous contact geometry greatly enhanced impedance matching and electromagnetic isolation.

Innovations in Contact Design and PCB Termination

The relentless quest for signal integrity birthed technologies like twinaxial shielded contact pairs, which curbed impedance discontinuities and minimized crosstalk. Traditional through-hole soldering gave way to compliant press-fit contacts and reflow-compatible surface mounts. This allowed shorter signal paths and minimized delay differences (skew) between vertically staggered rows of contacts.

Connector vias underwent micro-drilling and back-drilling to eliminate stubs, further reducing signal distortion. Some designs eliminated plated-through holes entirely, embracing high-density surface-mount techniques that support thermally and mechanically challenging configurations.
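To illustrate why stub removal matters, the sketch below applies the common quarter-wavelength approximation for an open via stub: the first resonance falls near c / (4 · L · √Dk). The stub lengths and the dielectric constant are assumed values chosen for illustration, not figures from any specific board or vendor.

```python
import math

C_M_PER_S = 299_792_458.0  # speed of light in vacuum


def stub_resonance_hz(stub_length_m: float, dk: float) -> float:
    """First quarter-wave resonance of an open via stub (approximation)."""
    if stub_length_m <= 0 or dk < 1:
        raise ValueError("stub length must be positive and Dk must be >= 1")
    return C_M_PER_S / (4.0 * stub_length_m * math.sqrt(dk))


# Assumed example values: a 2.0 mm stub before back-drilling, a 0.2 mm
# residual stub afterwards, and an effective dielectric constant of 3.5.
ASSUMED_DK = 3.5
for label, length_mm in (("before back-drill", 2.0), ("after back-drill", 0.2)):
    f_hz = stub_resonance_hz(length_mm * 1e-3, ASSUMED_DK)
    print(f"{label}: {length_mm} mm stub, first resonance near {f_hz / 1e9:.0f} GHz")
```

Under these assumptions the longer stub resonates at roughly 20 GHz, well inside the band a 224 Gb/s PAM4 lane occupies (Nyquist frequency of roughly 56 GHz), while the shortened stub pushes the resonance far above it.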

Midplane and Orthogonal Architectures: Redefining Physical Layouts

To shorten signal paths and mitigate copper channel degradation, engineers introduced midplane and orthogonal architectures. In these layouts, the traditional rear-mounted backplane is repositioned to the center of the chassis. Cards insert from both sides, minimizing path lengths.

Orthogonal midplanes enable direct daughtercard-to-daughtercard connectivity, removing the backplane from the critical path entirely. However, these designs challenge airflow management and serviceability, demanding innovative thermal and structural solutions.

High-Speed Signal Integrity Challenges: The Case Against PCB-Only Traces

Signal attenuation within even short PCB traces becomes prohibitive at ultra-high speeds. In high-performance computing environments, insertion loss and skew across even minimal distances can undermine throughput and inflate power budgets. This necessitates minimizing copper trace lengths on PCBs, redirecting high-speed lanes through twinaxial cables that offer superior impedance control and electromagnetic isolation.

By terminating cables near chipsets and routing them directly to I/O interfaces, engineers dramatically reduce signal degradation. This principle has become foundational for modern high-speed chassis designs.
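A rough loss tally shows the effect of moving most of the route off the board. The sketch below sums per-segment insertion loss for a PCB-only channel and for a channel that breaks out to twinax near the chip. All loss-per-inch and connector figures are placeholder assumptions for illustration, not measured data for any material or cable.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    """One piece of a channel: a PCB trace or a twinax cable run."""
    name: str
    length_in: float       # length in inches
    loss_db_per_in: float  # assumed loss per inch at the Nyquist frequency

    @property
    def loss_db(self) -> float:
        return self.length_in * self.loss_db_per_in


def channel_loss_db(segments, connector_loss_db=0.0):
    """Sum the segment losses plus a lumped allowance for connectors."""
    return sum(s.loss_db for s in segments) + connector_loss_db


# Placeholder figures (assumptions, not measurements).
PCB_DB_PER_IN = 1.5
TWINAX_DB_PER_IN = 0.3

pcb_only = [Segment("pcb trace", 20.0, PCB_DB_PER_IN)]
cabled = [
    Segment("pcb breakout near chip", 2.0, PCB_DB_PER_IN),
    Segment("twinax cable", 18.0, TWINAX_DB_PER_IN),
]

pcb_only_db = channel_loss_db(pcb_only, connector_loss_db=2.0)
cabled_db = channel_loss_db(cabled, connector_loss_db=3.0)
print(f"PCB-only channel: {pcb_only_db:.1f} dB")
print(f"Cabled channel:   {cabled_db:.1f} dB")
```

The cabled route carries an extra connector allowance yet still comes out far lower in total loss under these assumptions, because most of its length runs through the lower-loss medium.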

Cabled Backplanes: The Logical Next Step

Extending the cable-based concept, cabled backplanes replace the PCB traces that traditionally carried high-speed I/O lanes with high-performance twinaxial cabling. Lower-speed signals and power remain on the PCB backplane, while high-speed channels bypass it via direct cable routing. IEEE’s 40 dB insertion loss guideline frames the boundary for PCB copper’s viability, beyond which twinaxial and optical links become the go-to media.

Cabled backplanes are already prevalent in top-tier HPC systems, where performance outweighs cost. Their adoption is widening as interconnect complexity and bandwidth demands explode.
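The partitioning logic can be sketched as a simple budget check: keep a lane on the PCB if its estimated loss fits the budget, move it to twinax if only the cable fits, and fall back to optics otherwise. The 40 dB budget is the figure quoted above; the function name and the per-inch loss defaults are hypothetical placeholders, not values from IEEE or any vendor.

```python
BUDGET_DB = 40.0  # insertion-loss figure quoted in the text


def pick_medium(length_in, pcb_db_per_in=1.5, twinax_db_per_in=0.3,
                budget_db=BUDGET_DB):
    """Pick the first medium whose estimated loss fits the budget.

    The loss-per-inch defaults are illustrative assumptions only.
    """
    if length_in < 0:
        raise ValueError("length must be non-negative")
    if length_in * pcb_db_per_in <= budget_db:
        return "pcb"
    if length_in * twinax_db_per_in <= budget_db:
        return "twinax"
    return "optical"


for length in (10, 40, 200):
    print(f"{length:>3} in route: {pick_medium(length)}")
```

A real design flow would also weigh connector loss, crosstalk, cost, and serviceability; the sketch covers only the loss-budget side of the decision.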

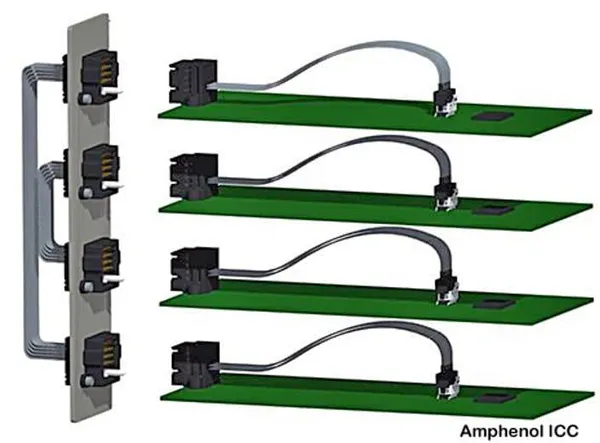

Amphenol’s High-Performance Cabled Solutions

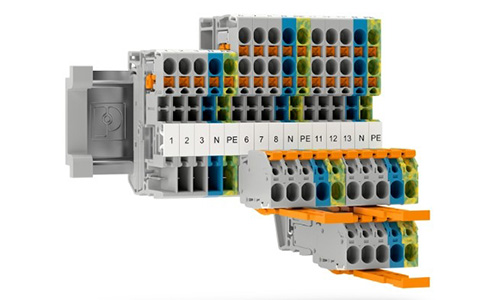

Amphenol offers elite cabled backplane configurations leveraging its Paladin HD2 connector system, optimized for twinaxial ribbon and discrete cables. High-speed differential signals traverse precision-matched harnesses, while power and low-speed I/O use conventional connectors. Rack-level power distribution often relies on robust busbars, delivering kilowatts per node.

Guiding posts ensure proper alignment between backplane connectors and mating servers, crucial for minimizing wear and signal mismatch.

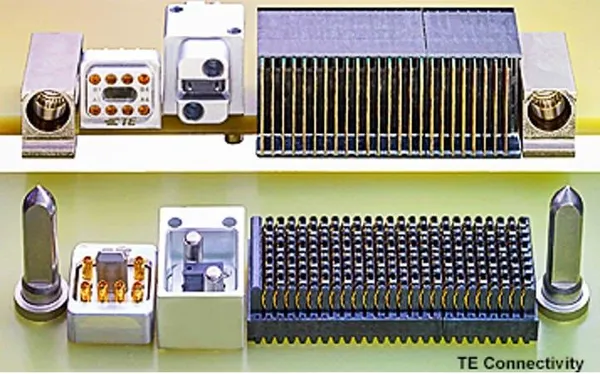

Advanced Connectors for 224G and Beyond

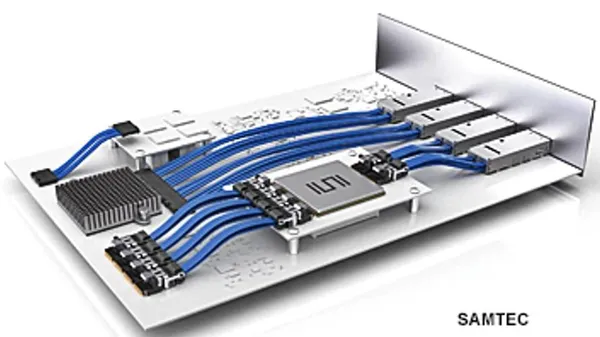

Modern AI and HPC systems rely on a new class of connectors engineered for stratospheric bandwidths. Offerings like TE’s Adrenaline Slingshot, Samtec’s NovaRay®, and Molex’s Inception meet or exceed 224 Gb/s per-lane specifications. These interconnects balance signal integrity with mechanical resilience, enabling next-gen compute density in tight enclosures.

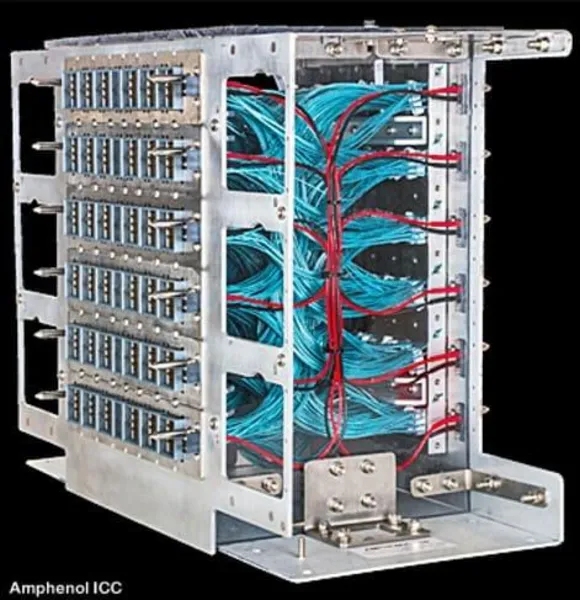

The Complexity of Early Cable Backplanes—and the Rise of Modularity

Legacy cable backplanes, often a chaotic tangle of twinaxial assemblies, proved unmanageable. Troubleshooting faults required navigating a labyrinthine web, hindering serviceability. The remedy? Modularization.

Modular cable boxes encapsulate high-speed interconnects within pluggable, pre-tested units. Large modules demand sturdy mechanical alignment systems and tighter rack tolerances. These boxes are tailored to specific use cases and are factory-tested for flawless deployment.

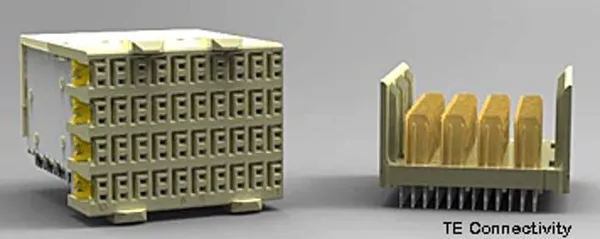

TE’s Boxed Cable Backplanes: Purpose-Built for Hyperscale

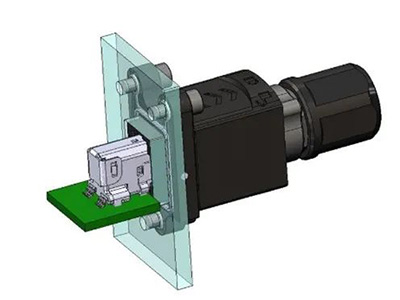

TE Connectivity showcased a boxed cable backplane solution for hyperscale servers and switches. Installed at the rear of the chassis, these assemblies offer plug-and-play integration, signal integrity assurance, and thermal efficiency. The design prioritizes field-replaceability while maintaining electrical excellence.

NVIDIA’s Radical Approach: Copper-Only, Cable-Centric Design

When NVIDIA engineered its DGX GB200 NVL72 AI supercomputer, it bypassed PCB backplanes entirely. At the heart of the system lies the NVSwitch chip with 50 billion transistors, demanding an interconnect strategy of unprecedented scale and fidelity. The solution: 5,000 twinaxial NVLink cables stretching over two miles—consolidated into four vertical NVLink trays.

Fiber optics offer density and reach, but the 2.4 million transceivers an optical design would have required would have added some 40 megawatts of power draw. Copper, optimized for signal integrity, prevailed as the pragmatic choice.
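A quick back-of-the-envelope check puts the quoted figures in perspective. The sketch below uses only the numbers stated above (5,000 cables, two miles of total length, 2.4 million transceivers, 40 megawatts) and derives an average cable length and an implied power per transceiver. Both are simple averages, not published specifications.

```python
METERS_PER_MILE = 1609.344

# Figures as quoted in the text.
cable_count = 5_000
total_cable_m = 2 * METERS_PER_MILE
transceiver_count = 2_400_000
added_power_w = 40e6

# Derived averages (illustrative arithmetic only).
mean_cable_m = total_cable_m / cable_count
watts_per_transceiver = added_power_w / transceiver_count

print(f"Average cable length: {mean_cable_m:.2f} m")
print(f"Implied power per transceiver: {watts_per_transceiver:.1f} W")
```

The average run works out to well under a meter, the kind of short reach where passive copper remains practical.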

From Cable to Light: The Future Is Silicon Photonics

Despite copper’s current dominance, optical interconnects loom large on the horizon. As per-lane data rates climb past 224 Gb/s, the physical reach of copper shortens. Future systems may require optical links for anything beyond half-rack distances.

NVIDIA has already committed to implementing silicon photonics in its flagship platforms. The recently unveiled Quantum-X800 switch integrates CPO (co-packaged optics) technology, leveraging 144 MPO cables to deliver 115 Tb/s—heralding the optical era.
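The quoted totals imply a per-cable rate that can be checked with simple division. In the sketch below, the 144-cable and 115 Tb/s figures come from the text, while the 200 Gb/s payload per lane is an assumption used only to estimate how many lanes each cable would carry.

```python
import math

# Figures as quoted in the text.
total_tbps = 115
cable_count = 144

# Assumption for illustration: 200 Gb/s of payload per electrical/optical lane.
ASSUMED_LANE_GBPS = 200

per_cable_gbps = total_tbps * 1000 / cable_count
lanes_per_cable = math.ceil(per_cable_gbps / ASSUMED_LANE_GBPS)

print(f"Per-cable rate: about {per_cable_gbps:.0f} Gb/s")
print(f"Lanes per cable at {ASSUMED_LANE_GBPS} Gb/s each: {lanes_per_cable}")
```

The result lands close to 800 Gb/s per cable, which suggests the 115 Tb/s total is a rounded figure.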

Conclusion: A Transcendent Shift in Backplane Design

As AI systems race toward ever-higher data rates, the limitations of copper signal paths force the hand of innovation. Hybrid cabled backplanes, modular optical extensions, and advanced shielding are no longer just performance enhancers—they are foundational to the AI datacenter architecture. With companies like TE, Amphenol, and Molex forging the interconnects of tomorrow, the future of backplane design will not only support the demands of AI but shape its trajectory.

Shenzhen Gaorunxin Technology Co., Ltd